Attention Is All You Need!

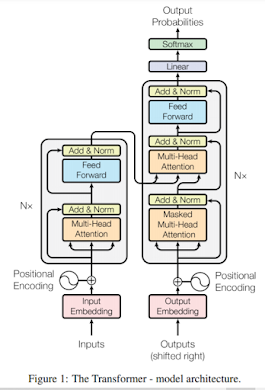

Attention Is All You Need – Paper review

This article gives a quick, intuitive two-minute overview of transformers. For an in-depth understanding, please refer to the paper listed in the references below.

What did the authors try to accomplish?

One of the main problems with traditional encoder-decoder models that use RNNs in the encoder is that the encoder processes the input sequentially, one token at a time. The hidden state for each token depends on the hidden state computed for the previous token, so the input cannot be processed in parallel. The authors addressed this problem with a new architecture, the Transformer.

The architecture of the proposed Transformer model is shown below. It has two modules: an encoder and a decoder. Unlike an RNN encoder, which consumes the input token by token across time steps, the encoder in this architecture processes the entire input sequence at once. This removes the sequential bottleneck in the encoder. The other major change in this architecture is the removal of RNN ce...
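The difference between sequential RNN encoding and processing the whole input at once can be sketched in a few lines of Python. This is a toy illustration, not the paper's implementation: `rnn_encode` is a hypothetical elementwise recurrence, and `attend`/`self_attention` follow the paper's scaled dot-product form softmax(QK^T / sqrt(d_k))V while assuming Q = K = V = the raw input (no learned projections, a single head). All function names here are illustrative.

```python
import math
from typing import List

Vector = List[float]


def rnn_encode(xs: List[Vector], w: float = 0.5, u: float = 0.5) -> List[Vector]:
    """Toy RNN-style encoder (hypothetical): h_t = tanh(w * x_t + u * h_{t-1}), elementwise."""
    h = [0.0] * len(xs[0])  # initial hidden state h_0 = 0
    states = []
    for x in xs:
        # Step t needs h_{t-1}, so positions must be processed one after another.
        h = [math.tanh(w * xi + u * hi) for xi, hi in zip(x, h)]
        states.append(h)
    return states


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, xs: List[Vector]) -> Vector:
    """Scaled dot-product attention for one query, assuming keys = values = xs."""
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in xs]
    weights = softmax(scores)
    # Output is the attention-weighted average of the value vectors.
    return [sum(wt * v[j] for wt, v in zip(weights, xs)) for j in range(d_k)]


def self_attention(xs: List[Vector]) -> List[Vector]:
    """Simplified self-attention: Q = K = V = xs (no learned projections, one head)."""
    # No state is carried between positions: each attend() call reads only the
    # full input, so all positions could be computed in parallel.
    return [attend(x, xs) for x in xs]


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(rnn_encode(tokens))
print(self_attention(tokens))
```

In this sketch, changing the last token leaves the earlier RNN states untouched (each state only sees the past), while it can change every self-attention output, because each position attends over the whole input in one step.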