Attention Is All You Need!

Attention Is All You Need – Paper review

This article gives a quick, two-minute intuitive overview of transformers. For an in-depth understanding, please refer to the paper listed in the references below.

What did the author try to accomplish?

One of the main problems with traditional encoder-decoder models that use RNNs in the encoder is that the encoder processes the input sequentially. The hidden state at each step depends on the hidden state from the previous step, so the input cannot be processed in parallel.
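To make the sequential bottleneck concrete, here is a minimal sketch of a plain (Elman-style) RNN encoder in NumPy. The function name `rnn_encode`, the weight names, and the toy sizes are illustrative assumptions, not taken from the paper. The `for` loop is the point: step `t` cannot start until step `t - 1` has produced its hidden state.

```python
import numpy as np

def rnn_encode(x, W_x, W_h, b):
    """Toy RNN encoder: h_t = tanh(x_t W_x + h_{t-1} W_h + b).

    Each iteration needs the previous hidden state, so the time steps
    must be computed one after another rather than in parallel.
    """
    h = np.zeros(W_h.shape[0])  # initial hidden state h_0 = 0
    states = []
    for t in range(x.shape[0]):
        h = np.tanh(x[t] @ W_x + h @ W_h + b)  # depends on h from step t - 1
        states.append(h)
    return np.stack(states)  # shape (seq_len, hidden)

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 4))    # 5 tokens with 4-dim embeddings (toy sizes)
W_x = rng.normal(size=(4, 3))  # input-to-hidden weights (hypothetical)
W_h = rng.normal(size=(3, 3))  # hidden-to-hidden weights (hypothetical)
b = np.zeros(3)

H = rnn_encode(x, W_x, W_h, b)
print(H.shape)  # (5, 3)
```

Doubling the sequence length doubles the number of loop iterations that must run in order. This serial chain is what the Transformer removes.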

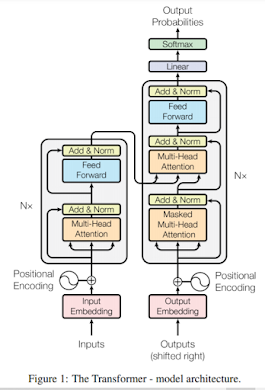

The authors addressed this problem of sequential processing in encoder-decoder models with a new architecture, the Transformer. The architecture of the proposed Transformer model is shown below.

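The other major change in this architecture is that the RNN cells are removed entirely and only attention mechanisms are used. This works because the entire input is provided to the model at every time step, so attention can relate any two positions directly instead of passing information along through a recurrent hidden state.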
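For reference, the attention used throughout the paper is scaled dot-product attention. With query, key and value matrices $Q$, $K$, $V$ and key dimension $d_k$ (softmax applied row-wise), the paper defines it, together with its multi-head form with $h$ heads and learned projection matrices $W_i^Q$, $W_i^K$, $W_i^V$, $W^O$, as:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^\top}{\sqrt{d_k}}\right)V
$$

$$
\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\,W^O,
\qquad
\mathrm{head}_i = \mathrm{Attention}(QW_i^Q,\ KW_i^K,\ VW_i^V)
$$

Every row of $QK^\top$ is computed with matrix products over the whole sequence at once, which is why there is no step-by-step dependency as in an RNN.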

This new Transformer model came to dominate sequence-to-sequence modeling and opened up a new era of deep learning. Transformer-based models soon became the state-of-the-art models in NLP.

What are the key elements of the approach?

1. Self-attention and multi-head attention:

In self-attention, every token of the input is compared with every other token to obtain attention scores. This is helpful because any token in the input may be related to any other token. Running several attention computations side by side, each with its own learned linear transformations, lets the model capture different kinds of relations; this is multi-head self-attention. Because every token is compared with every other token, the time and memory cost of self-attention grows quadratically with the sequence length. This issue was later addressed by another architecture, the Reformer.
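Below is a minimal NumPy sketch of single-head and multi-head self-attention, assuming random toy weights. The helper names `softmax`, `self_attention` and `multi_head_self_attention`, and the choice to pass heads as a list of `(W_q, W_k, W_v)` tuples, are hypothetical and not the paper's code. The `(n, n)` weight matrix makes the time-complexity issue visible: for `n` tokens it holds `n * n` scores.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over X of shape (n, d_model)."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # (n, n): every token vs. every other token
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights

def multi_head_self_attention(X, heads, W_o):
    """Each head has its own projections; head outputs are concatenated, then projected by W_o."""
    outputs = [self_attention(X, W_q, W_k, W_v)[0] for W_q, W_k, W_v in heads]
    return np.concatenate(outputs, axis=-1) @ W_o

rng = np.random.default_rng(0)
n, d_model, n_heads = 6, 8, 2  # toy sizes
d_k = d_model // n_heads
X = rng.normal(size=(n, d_model))  # stand-in for token embeddings
heads = [tuple(rng.normal(size=(d_model, d_k)) for _ in range(3)) for _ in range(n_heads)]
W_o = rng.normal(size=(n_heads * d_k, d_model))

out, weights = self_attention(X, *heads[0])
print(weights.shape)  # (6, 6)
mh = multi_head_self_attention(X, heads, W_o)
print(mh.shape)  # (6, 8)
```

Unlike the RNN loop, nothing here iterates over positions: all tokens are processed in the same matrix products, which is what allows parallelization.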

2. Positional encoding:

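This is used to encode the position of each token in the input, and in the partial output given to the decoder. Because attention by itself has no notion of token order, this information must be added explicitly: the positional encodings are added to the token embeddings.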
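The paper uses fixed sinusoidal encodings, $PE_{(pos, 2i)} = \sin(pos / 10000^{2i/d_{model}})$ and $PE_{(pos, 2i+1)} = \cos(pos / 10000^{2i/d_{model}})$. A minimal NumPy sketch follows; the function name `sinusoidal_positional_encoding` and the toy sizes are assumptions, and it assumes an even `d_model`.

```python
import numpy as np

def sinusoidal_positional_encoding(max_len, d_model):
    """Return a (max_len, d_model) array of sinusoidal position encodings."""
    assert d_model % 2 == 0, "this sketch assumes an even d_model"
    pos = np.arange(max_len)[:, None]              # positions, shape (max_len, 1)
    two_i = np.arange(0, d_model, 2)[None, :]      # even dimension indices 2i
    angles = pos / np.power(10000.0, two_i / d_model)
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angles)  # even dimensions use sine
    pe[:, 1::2] = np.cos(angles)  # odd dimensions use cosine
    return pe

pe = sinusoidal_positional_encoding(max_len=50, d_model=16)
print(pe.shape)  # (50, 16)

# Usage: add the encodings to (hypothetical) token embeddings of a 10-token sequence.
embeddings = np.random.default_rng(0).normal(size=(10, 16))
x_with_pos = embeddings + pe[:10]
```

Because the encodings are added rather than concatenated, they keep the model dimension unchanged, and each position gets a distinct pattern across dimensions.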

To learn more about this topic, refer to the paper.

References:

1. Vaswani, A., N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, and I. Polosukhin. "Attention is all you need." In Advances in Neural Information Processing Systems (NIPS), 2017.
